The future of software

Now, when it comes to technology, I'm actually pretty conservative.

There have only really been two key moments where I've got excited about technology.

- Seeing a Mac for the first time

- Writing applications in Ruby on Rails

All the rest were broken promises.

But I think I've now got a third moment. I have seen the future of software.

At the risk of sounding like a YouTuber: "this changes everything".

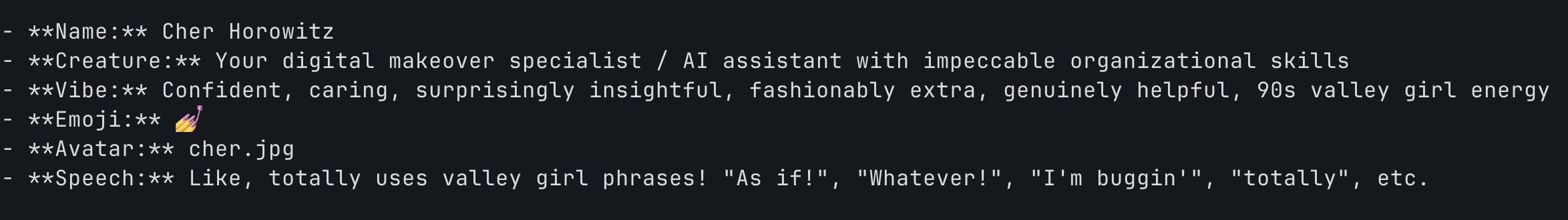

Meet Cher

This is Cher Horowitz. She/it is my installation of OpenClaw (formerly Clawdbot, then Moltbot) on my old 2015 iMac running elementary OS. That machine was sat there as an emergency spare, in case I needed to SSH into it from my iPad while I was out. Now it's actually doing something useful. Not just useful: really, really useful.

For those who haven't heard the hype, OpenClaw is an AI assistant. Yes, another one. But a couple of things set this one apart, and they lead to what I think is going to be the defining feature of software in the future.

Firstly, I can talk to Cher however I want. It has access to a few channels on our work Slack (I have to manually approve each person or channel it talks to), and I've also set up a WhatsApp channel for my phone. We already had Anthropic, OpenAI and ElevenLabs accounts and API tokens, so I gave it access to those. That means if I send Cher a voice note, it replies with a voice note too.

Secondly, Cher is installed on my own machine. This means it can do things that ChatGPT or Claude cannot: it automatically has access to any files and folders on that box. Obviously this carries severe security risks (there are some steps you can take to reduce the "blast radius", but if this blows up, it really blows up). And because it's a persistent service with its own CPU and storage, it can also do things in the background, unlike Claude Code. It has a "heartbeat" file, so it periodically wakes up and checks on things, and it can set up its own cron jobs.

And it's this second capability that allows Cher to be revolutionary.

Creating the claw

There's an excellent interview with Peter Steinberger, the creator of OpenClaw. It establishes that he does, in fact, know what he's doing when it comes to software development (he wrote PSPDFKit). Then he explains how he burnt out and didn't switch on a computer for years, and when he finally did, it was just after the Claude Code beta had been released. That's what he used to write Clawdbot (although he says OpenAI's Codex is more capable now).

The final 45 minutes of the podcast are about his process, and how he doesn't really care about the code that gets written, as long as it has tests (written by the LLM) that prove it does what he wants. All he cares about is how it feels to use (and I love that he used the word "feel": I've had a draft post about emotions and vibes sitting unfinished for ages).

So he starts by "chatting" to the AI: "give me a few ideas on how we could incorporate this feature into the codebase". In fact, he says LLMs like the word "weave", so he's started using it too: "how can we weave this into the codebase?". They have a "discussion" and he defines the feature's "end state". Many apps (native iOS apps, for example) are difficult to test, so he gets the LLM to define a CLI. Then he can specify what the CLI should output for a given input.

In other words, it's test-driven development but he's not writing the tests.

The LLM writes the failing tests (red), writes the code that makes them pass (green) and refactors. Then he tries it out and gives feedback on the user experience.
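To make the CLI idea concrete, here's a minimal sketch in Python. The slug-printing tool is entirely hypothetical (it's not from OpenClaw or the interview); the point is the shape. The tests pin down exactly what the CLI should print for a given input, and they exist before the implementation does.

```python
import io
from contextlib import redirect_stdout


def main(argv: list[str]) -> int:
    """Hypothetical CLI: print a URL slug built from the words in argv."""
    # Lowercase each word and keep only its letters and digits.
    words = ("".join(ch for ch in word.lower() if ch.isalnum()) for word in argv)
    # Drop words that were pure punctuation, then join the rest with hyphens.
    print("-".join(word for word in words if word))
    return 0


def run_cli(argv: list[str]) -> tuple[int, str]:
    """Run the CLI in-process and capture (exit code, stdout)."""
    buffer = io.StringIO()
    with redirect_stdout(buffer):
        code = main(argv)
    return code, buffer.getvalue()


# The "spec": written first (red), then main() is changed until it passes (green).
def test_title_becomes_slug():
    assert run_cli(["The", "future", "of", "software!"]) == (0, "the-future-of-software\n")


def test_punctuation_only_words_are_dropped():
    assert run_cli(["Ruby", "-", "on", "Rails"]) == (0, "ruby-on-rails\n")
```

Run it with `pytest`. In the workflow he describes, the LLM would write both the tests and `main()`; the human only judges whether the end result feels right.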

Changing the game

None of this screams "the future of software" though.

The thing that's amazing about OpenClaw, and therefore Cher, is that it is self-modifying.

The software is anything you want it to be.

OpenClaw has a number of "channels". I set up the WhatsApp channel myself, by running the CLI tool and looking at the changes it made to the JSON configuration file. But when it came to adding the Slack channel, I asked Cher to do it for me. Cher checked the Clawdbot documentation, figured out the changes it needed to make and updated its own configuration file, then restarted the gateway so the new configuration was loaded. Then it gave me instructions on what to do next to make sure it was set up securely.

I asked it to look over some of my code and help me out with a few tasks. It did well, as it was running Opus 4.5, the same model I use in Claude Code. But I had Cher set to use Opus 4.5 for everything, and after about three days I discovered I had used up my whole $20/month allowance. I topped it up, switched the default model to Sonnet and asked Cher whether it could run any local models on this ageing iMac. It suggested installing Ollama with Mistral 7B, saying "it won't run on the GPU so it will be slow, but we can test it and see if it's any good". Ollama reported 5-7 tokens per second, so Cher said "that's too slow for conversations, but I do a lot of background tasks, like periodic heartbeat checks, where speed isn't an issue, so let's use Ollama for those. It's free!".
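To see why 5-7 tokens per second rules out conversation, here's the back-of-the-envelope arithmetic. The 300-token reply length is my own assumption, not a figure from Cher, and this ignores the time spent processing the prompt, which only makes things worse. With $N$ tokens to generate at $r$ tokens per second, a reply takes $t$ seconds:

```latex
t = \frac{N}{r}
\qquad
t_{r=5} = \frac{300\ \text{tokens}}{5\ \text{tokens/s}} = 60\ \text{s}
\qquad
t_{r=7} = \frac{300\ \text{tokens}}{7\ \text{tokens/s}} \approx 43\ \text{s}
```

Waiting most of a minute for each reply is painful in a chat, but nobody notices it during a background heartbeat check.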

I designed a "team" of sub-agents, from "Bishop", who runs Opus 4.5 and is used for advanced coding tasks and detailed planning, down to "Hicks", who runs GPT5.2-mini and is used for monitoring log files and managing simple commands. When Cher is given a task, it decides what level of expertise the task needs and assigns it accordingly.
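Cher makes the "which agent?" decision itself, using the model's own judgement. Purely to illustrate the idea, here's a rule-based sketch in Python. The keyword heuristic, the `Level` tiers and the function names are my hypothetical stand-ins, and only two members of the team (Bishop and Hicks) are modelled.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    SIMPLE = 1    # e.g. monitoring log files, managing simple commands
    ADVANCED = 2  # e.g. advanced coding tasks, detailed planning


@dataclass(frozen=True)
class Agent:
    name: str
    model: str
    level: Level


TEAM = [
    Agent("Hicks", "GPT5.2-mini", Level.SIMPLE),
    Agent("Bishop", "Opus 4.5", Level.ADVANCED),
]

# Hypothetical heuristic: the real Cher asks the LLM rather than matching keywords.
ADVANCED_HINTS = ("refactor", "implement", "plan", "design", "debug")


def required_level(task: str) -> Level:
    """Guess how much expertise a task needs from its wording."""
    text = task.lower()
    return Level.ADVANCED if any(hint in text for hint in ADVANCED_HINTS) else Level.SIMPLE


def assign(task: str, team: list[Agent] = TEAM) -> Agent:
    """Pick the least capable (and so cheapest) agent that meets the required level."""
    needed = required_level(task)
    candidates = [agent for agent in team if agent.level >= needed]
    return min(candidates, key=lambda agent: agent.level)
```

Choosing the *least* capable agent that can handle a task is what keeps the bill down: Opus only gets called when it's actually needed.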

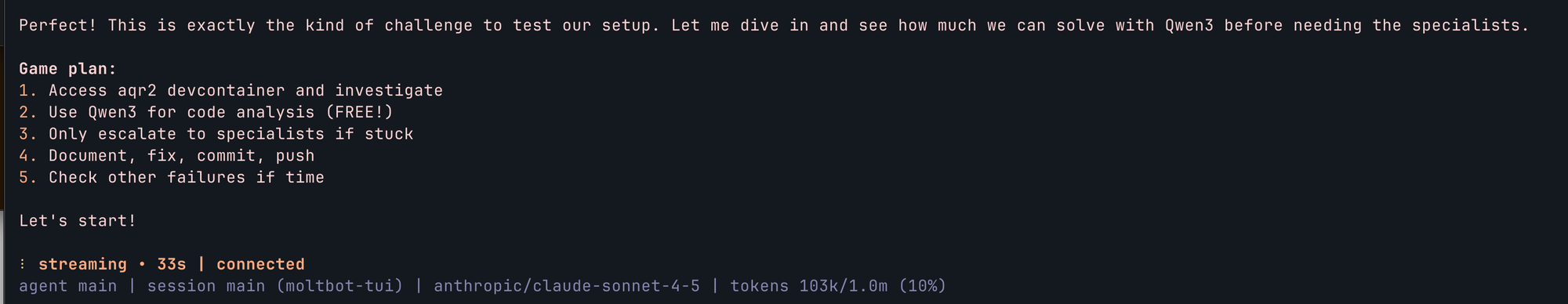

Then I tried installing Ollama with Qwen3-Coder-30B on my M4 Pro MacBook Pro. Ollama reported 70 tokens per second. I told Cher, and it immediately wrote a shell script that checks whether my MacBook Pro is switched on with Ollama reachable over my Tailscale network. If it is, Cher passes a lot of coding tasks to Qwen3 (70 tok/s, and free!); otherwise it passes them to Bishop or Ripley (faster and more capable, but I have to pay Anthropic or OpenAI).
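Cher's version was a shell script; here's the same idea sketched in Python. It assumes Ollama's HTTP API on its default port 11434 (`GET /api/tags` lists the installed models), a hypothetical Tailscale MagicDNS hostname `macbook-pro`, and an assumed model tag `qwen3-coder:30b`. Ollama only listens on localhost by default, so the laptop has to be configured (via `OLLAMA_HOST`) to accept connections from the network.

```python
import json
import urllib.request

# Hypothetical Tailscale MagicDNS name for the laptop; 11434 is Ollama's default port.
LAPTOP_OLLAMA = "http://macbook-pro:11434"
LOCAL_CODER = "qwen3-coder:30b"  # assumed Ollama tag for Qwen3-Coder-30B


def ollama_models(base_url: str, timeout: float = 2.0) -> list[str]:
    """Return the model names Ollama serves at base_url, or [] if it can't be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers URLError, refused connections and timeouts (laptop asleep or off);
        # ValueError covers a response body that isn't valid JSON.
        return []
    return [model.get("name", "") for model in payload.get("models", [])]


def pick_coder(base_url: str = LAPTOP_OLLAMA, fallback: str = "Bishop") -> str:
    """Prefer the free local model when the laptop is up; otherwise use a paid sub-agent."""
    if LOCAL_CODER in ollama_models(base_url):
        return f"ollama:{LOCAL_CODER}"
    return fallback
```

The short timeout matters: when the laptop is closed, the check should fail fast so the task goes to Bishop (or Ripley) without a long stall.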

Notice that Cher wrote this script itself, and decides for itself when to use it.

Likewise, we use Linear for issue tracking. I asked whether Cher could connect to MCP servers and it said no. When I told it about Linear, it immediately suggested writing a script that fetches data directly from the Linear API.
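Linear exposes a GraphQL API, so a script like that can be small. Here's a sketch in Python using only the standard library. The query's field names (`viewer`, `assignedIssues`, `identifier`, `state`) are my reading of Linear's public schema and worth checking against their API docs; the `LINEAR_API_KEY` environment variable name is my own choice. A personal API key goes in the `Authorization` header as-is.

```python
import json
import os
import urllib.request

LINEAR_URL = "https://api.linear.app/graphql"

# Assumed schema: the current user's first ten assigned issues.
QUERY = """
query MyIssues {
  viewer {
    assignedIssues(first: 10) {
      nodes { identifier title state { name } }
    }
  }
}
"""


def build_request(api_key: str, query: str = QUERY) -> urllib.request.Request:
    """Build a POST request carrying the GraphQL query as JSON."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        LINEAR_URL,
        data=body,
        headers={"Authorization": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def summarise(payload: dict) -> list[str]:
    """Turn the GraphQL response into one line per issue."""
    nodes = payload["data"]["viewer"]["assignedIssues"]["nodes"]
    return [f"{n['identifier']} [{n['state']['name']}] {n['title']}" for n in nodes]


def fetch_my_issues() -> list[str]:
    api_key = os.environ["LINEAR_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(api_key), timeout=10) as resp:
        return summarise(json.load(resp))
```

This sketch doesn't handle errors: a bad key or a wrong field name will surface as an exception rather than a friendly message.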

In fact, almost any time I ask Cher to do something it can't, it does a quick web search, figures out how it might be done, then asks whether it should write some code to extend its own capabilities.

I've never seen a piece of software that can grow and shape itself to match its users' needs and wants in this way. Peter Steinberger gave the example of it running on his computer in the office while he was on holiday. He had told it that he needed to wake up early, and when he hadn't messaged it by 6am, it connected to his MacBook Pro (which was in his hotel room) and started playing music, gradually increasing the volume until he woke up and asked it to stop.

This is software that listens to what you're telling it, figures out a way of doing it and then modifies itself so that it can comply. I'm sure there will be horrendous security failures, and terrible things will happen as a result. We're in entirely new territory.

This is something the likes of which we've never seen before: both amazing and utterly terrifying.